The social impact of development practices on dating: A PhD Thesis on the Tinder Algorithm

Review of the development choices of Tinder's matching system and its social impact on gender and, more broadly, on couple formation, through a qualitative analysis.

- Researcher: Dr. Jessica Pidoux

- University: EPFL, Lausanne, Switzerland

- Date: 06 October, 2021

Tinder is an American dating app available in 190 countries. It is mainly used by young, educated, heterosexual people aged 18 to 35.

Like those of many other apps, Tinder's algorithm is protected by patents, so the details of how the system is developed are not made public. Our study is therefore based on the patent and on machine learning conferences where Tinder engineers presented their work.

Data Collected for the research

Tinder's data comes from the app itself (the user's activity and profile page) but also from users' Facebook accounts.

Every like the user gives on Facebook, the groups they belong to and the topics they follow are taken into account, as well as their activity on Tinder and how much time they spend online.

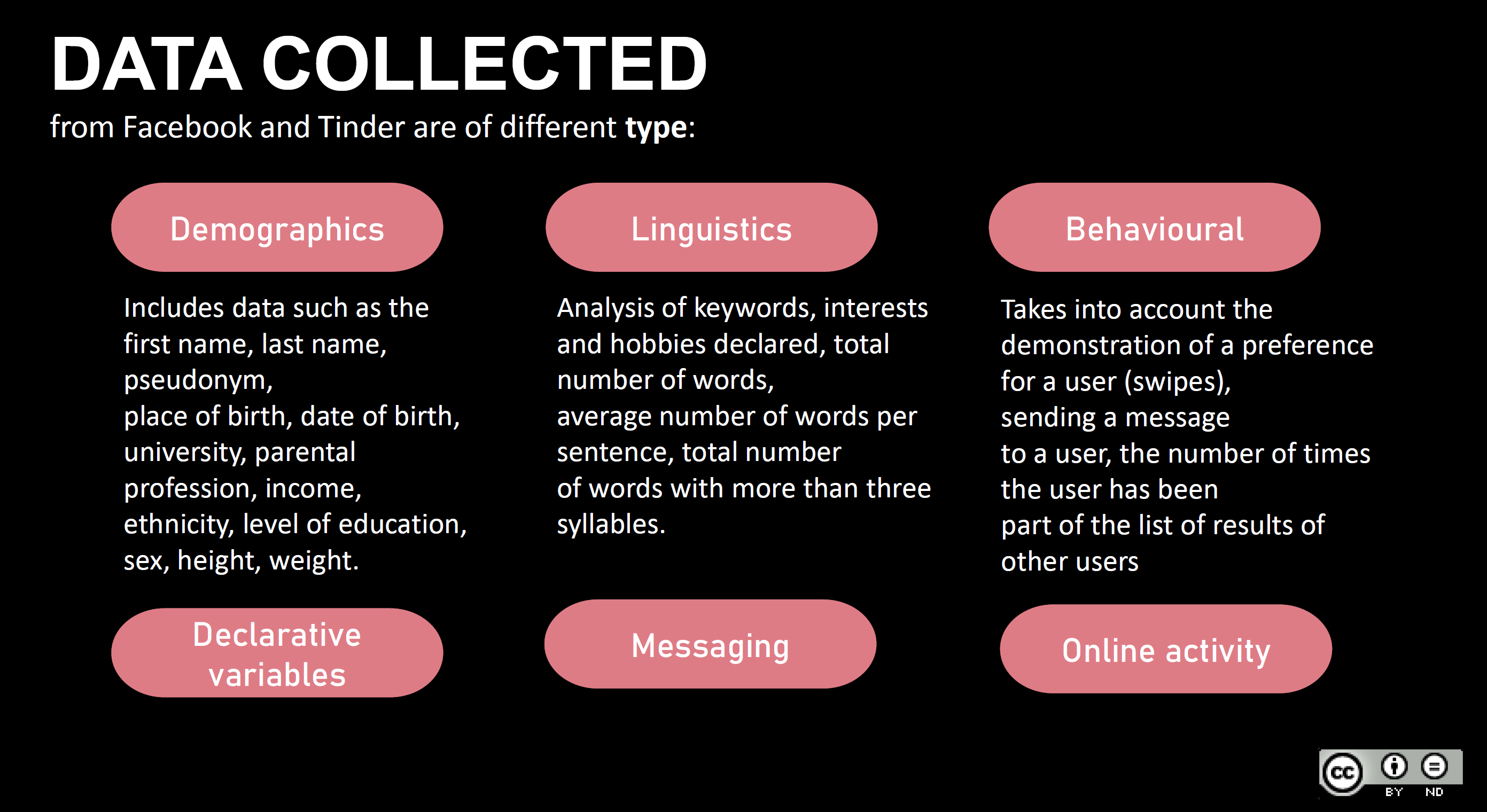

The data is classified into 3 categories:

- Demographics: information found in a profile page

- Linguistics: the keywords an individual uses on their profile, their messages, and the length of their sentences.

- Behavioural: mainly based on swipes. How often do you open the app? How many passes do you give to a profile? This is also used to infer your preferences from the profiles you have just swiped on.
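To make the three categories concrete, here is a minimal Python sketch of how such a profile record might be structured. The class `UserProfile`, its field names, and the helpers `mean_sentence_length` and `pass_rate` are hypothetical illustrations, not Tinder's actual schema or features.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class UserProfile:
    """Hypothetical record grouping the three data categories (not Tinder's schema)."""
    # Demographics: information found on a profile page
    age: int
    education: str
    # Linguistics: free text written by the user
    bio: str = ""
    messages: list[str] = field(default_factory=list)
    # Behavioural: swipe history (True = like, False = pass) and app usage
    swipes: list[bool] = field(default_factory=list)
    app_opens_per_day: float = 0.0


def mean_sentence_length(texts: list[str]) -> float:
    """Linguistic feature: average number of words per sentence, splitting naively on '.'."""
    sentences = [s.split() for text in texts for s in text.split(".") if s.strip()]
    return mean(len(words) for words in sentences) if sentences else 0.0


def pass_rate(swipes: list[bool]) -> float:
    """Behavioural feature: share of swipes that were passes."""
    return swipes.count(False) / len(swipes) if swipes else 0.0


profile = UserProfile(age=27, education="master", bio="I like hiking. Coffee first.",
                      swipes=[True, False, False, True])
print(mean_sentence_length([profile.bio] + profile.messages))  # 2.5
print(pass_rate(profile.swipes))  # 0.5
```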

Social Impact

Users are profiled from different sources without necessarily knowing that their data is being used. This reflects the big data fallacy: while data does provide information, more data does not yield “proportionately” more information.

All of this information is sensitive personal data, which has to be taken into consideration. European and Swiss law protect the user's identity and privacy.

The feature selection, that is, the dimensions chosen to describe users on a dating app, can be a major source of bias. For example, deciding to measure a user's education level affects the socioeconomic level at which they are compared with other users, which can lead to discriminatory results. Who selects these features is also an issue.

The weighting of the features, that is, how important each feature is, also has an impact. If education level or income is weighted more heavily, not everybody will have the same opportunities to find somebody. The system learns that if you have a low income, you should be matched with other low-income users rather than with users of other income levels.
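The effect of weighting can be shown with a toy weighted distance. This sketch assumes two hypothetical features normalised to [0, 1] (`income` and `outdoor`, an illustrative interest score) and made-up weights; it is not Tinder's formula, only a demonstration that raising one weight changes who counts as the closest match.

```python
# Hypothetical features normalised to [0, 1] and illustrative weights (not Tinder's values).
def weighted_distance(a: dict[str, float], b: dict[str, float],
                      weights: dict[str, float]) -> float:
    """Weighted Euclidean distance: a heavier weight makes that feature dominate."""
    return sum(w * (a[f] - b[f]) ** 2 for f, w in weights.items()) ** 0.5


def best_match(user: dict[str, float], candidates: dict[str, dict[str, float]],
               weights: dict[str, float]) -> str:
    """Return the name of the candidate closest to the user under the given weights."""
    return min(candidates, key=lambda name: weighted_distance(user, candidates[name], weights))


user = {"income": 0.2, "outdoor": 0.9}
candidates = {
    "A": {"income": 0.5, "outdoor": 0.9},  # different income, same interest
    "B": {"income": 0.2, "outdoor": 0.4},  # same income, different interest
}

print(best_match(user, candidates, {"income": 1.0, "outdoor": 1.0}))  # A
print(best_match(user, candidates, {"income": 5.0, "outdoor": 1.0}))  # B
```

With equal weights, candidate A (similar interests, different income) is the closest match; once income is weighted five times more, B (same income, different interests) wins.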

Metrics

Each user gets a score according to different metrics:

- Physical attractiveness

- I.Q.

- Readability

- Nervousness

- Similarity

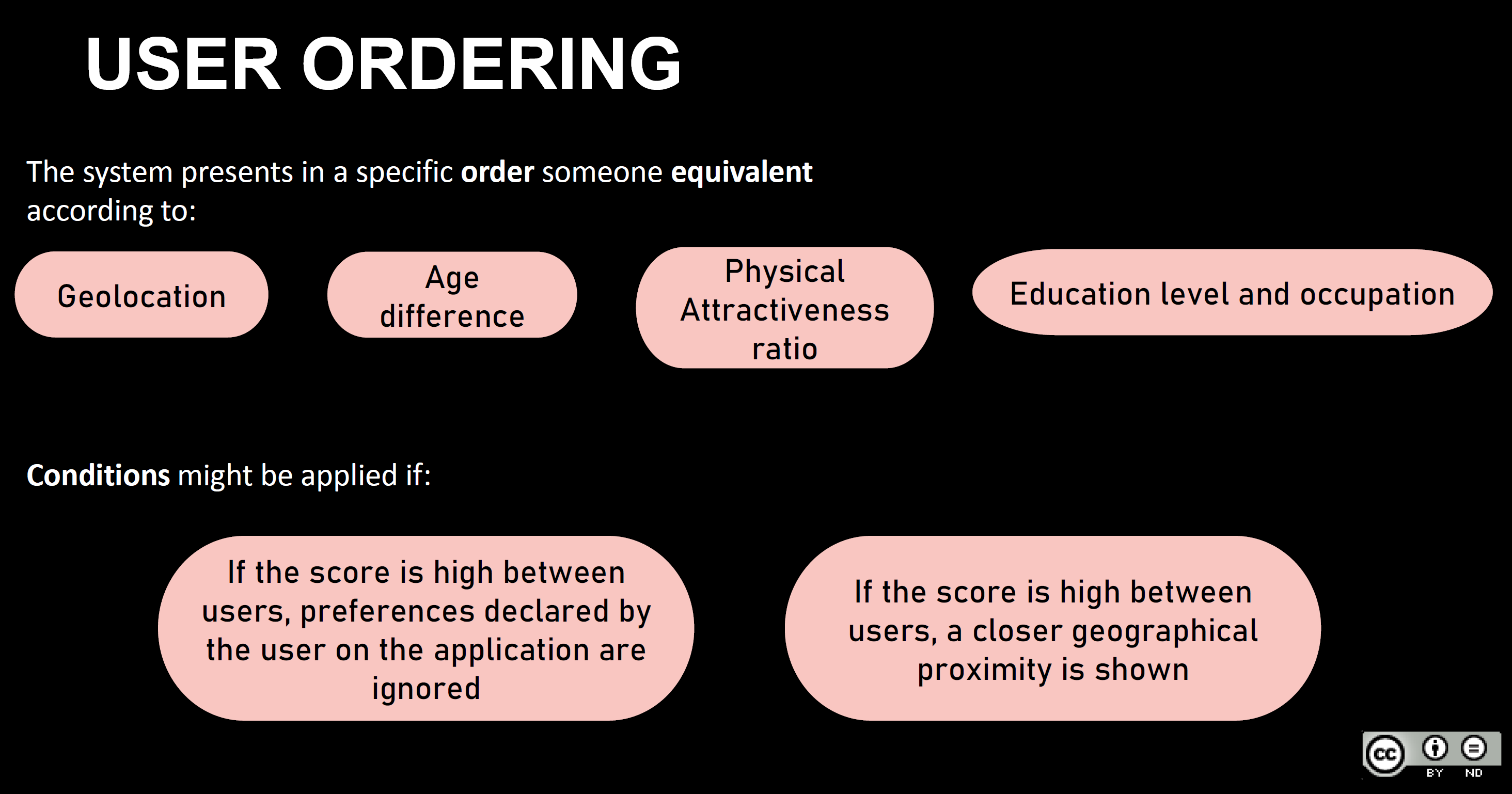

Tinder also presents results in the app in a specific order, which means that not everybody gets the first position. This order is neither random nor based only on geolocation or preferences: what users see on the interface differs from what is in the code.

For example, the age difference between two users, the ratio of their physical attractiveness scores and their education levels are weighted as more important features. However, if the system estimates that a user would be better matched with a person outside their declared preferences in the app, that person will be shown to them anyway.
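The ordering described above can be sketched as a ranking function. This is a hypothetical illustration: the score combines the three features the text names (age difference, attractiveness ratio, education level) with made-up weights, and an assumed `override` threshold lets a high-scoring candidate outside the declared age range into the deck. Geolocation is left out, and none of these names or values come from the patent.

```python
from dataclasses import dataclass


@dataclass
class Profile:
    name: str
    age: int
    attractiveness: float  # hypothetical score in (0, 1]
    education: int         # hypothetical ordinal level, 1 to 5


def match_score(user: Profile, other: Profile) -> float:
    """Hypothetical score that rises as age, attractiveness and education get closer."""
    age_term = 1 / (1 + abs(user.age - other.age))
    attractiveness_ratio = (min(user.attractiveness, other.attractiveness)
                            / max(user.attractiveness, other.attractiveness))
    education_term = 1 / (1 + abs(user.education - other.education))
    return 0.3 * age_term + 0.4 * attractiveness_ratio + 0.3 * education_term


def order_deck(user: Profile, candidates: list[Profile], min_age: int, max_age: int,
               override: float = 0.75) -> list[str]:
    """Rank candidates by score, best first.

    Candidates outside the declared age range are kept only if their score
    reaches the hypothetical `override` threshold.
    """
    scored = [(match_score(user, c), c) for c in candidates]
    kept = [(s, c) for s, c in scored if min_age <= c.age <= max_age or s >= override]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [c.name for _, c in kept]


me = Profile("me", 30, 0.7, 4)
deck = order_deck(me, [
    Profile("in_range_close", 31, 0.7, 4),
    Profile("in_range_far", 34, 0.35, 2),
    Profile("out_of_range_close", 27, 0.7, 4),
    Profile("out_of_range_far", 45, 0.35, 1),
], min_age=28, max_age=35)
print(deck)  # ['in_range_close', 'out_of_range_close', 'in_range_far']
```

Here the 27-year-old candidate falls outside the declared 28 to 35 range but still appears in second position, ahead of an in-range profile, because their score exceeds the threshold.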

Social Impact

When metrics are based on similarity, as in many recommendation systems, diversity is reduced. People are matched based on an estimation of beauty and socioeconomic level, and they are not exposed to a diversity of other types of people.

The ordering creates a hierarchy of profiles based on popularity and attractiveness. The more likes a user receives, the higher they appear in other users' lists, so the more they are viewed by others and the more likely they are to receive further likes. This discriminates against those who don't fit the “standards”.
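This rich-get-richer dynamic can be illustrated with a deliberately simplified, deterministic toy model. It assumes, hypothetically, that only the top `slots` profiles are seen each round and that every view produces a like; real exposure and like probabilities are not this simple.

```python
def simulate_popularity_loop(likes: dict[str, int], rounds: int, slots: int) -> dict[str, int]:
    """Toy model (hypothetical): each round the `slots` most-liked profiles are shown
    at the top of the deck, and every profile shown there gains exactly one like."""
    likes = dict(likes)  # work on a copy so the caller's data is unchanged
    for _ in range(rounds):
        top = sorted(likes, key=lambda name: likes[name], reverse=True)[:slots]
        for name in top:
            likes[name] += 1
    return likes


start = {"A": 5, "B": 4, "C": 3, "D": 3}
print(simulate_popularity_loop(start, rounds=10, slots=2))
# {'A': 15, 'B': 14, 'C': 3, 'D': 3}
```

After ten rounds, the one-like gap between B and C has grown to eleven, while C and D never gain visibility.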

The patent doesn't address homosexual relationships, even though Tinder, like many dating apps, is very generalist: it covers all sexual orientations, but through a binary gender option (female, male or both).

The patent also favours a patriarchal model, in which the man in a relationship holds a dominant position with respect to a woman with less power, money or attainment. On Tinder, younger women are presented to older men with a higher education level.

This means that if you are a woman, you don't have the same opportunities to meet all the types of men on the app, and the same is true the other way around. This is an example of human and algorithmic biases combined. In the past, perhaps, we assumed that many young women liked older men. The system reproduces that model because it works for the majority, but it also amplifies the effect, because Tinder takes the mean of the likes in a user's history and then presents the same type of profiles to all.
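Taking the mean of a user's liked profiles and recommending the nearest candidates can be sketched as follows. The two features (age and a hypothetical education level from 1 to 5), the profiles and the use of Euclidean distance are illustrative assumptions, not the patent's formula.

```python
from math import dist

# Hypothetical features per profile: (age, education level from 1 to 5).
Features = tuple[float, ...]


def preference_centroid(liked: list[Features]) -> Features:
    """Mean of the feature vectors of every profile the user has liked."""
    n = len(liked)
    return tuple(sum(v[i] for v in liked) / n for i in range(len(liked[0])))


def recommend(liked: list[Features], pool: dict[str, Features], k: int) -> list[str]:
    """Return the k profiles closest (Euclidean distance) to the centroid of past likes."""
    centre = preference_centroid(liked)
    return sorted(pool, key=lambda name: dist(pool[name], centre))[:k]


liked = [(40, 5), (44, 5), (42, 4)]  # a history of older, highly educated profiles
pool = {"P1": (41, 5), "P2": (25, 3), "P3": (43, 4), "P4": (30, 5)}
print(recommend(liked, pool, k=2))  # ['P1', 'P3']
```

Because the history contains only older, highly educated profiles, both recommendations are of the same type, and younger or less educated profiles are pushed down the list.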

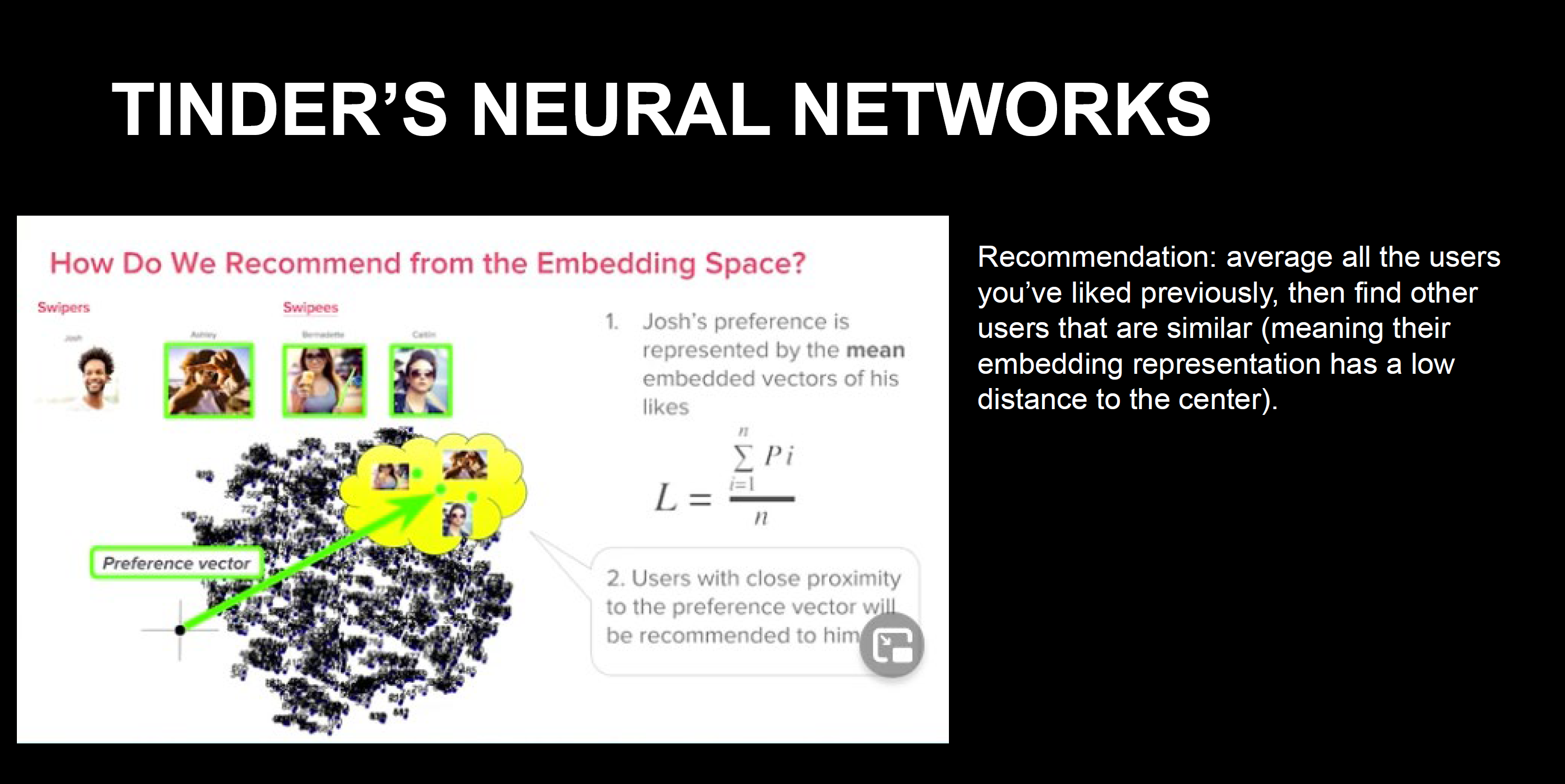

Neural networks

Tinder's algorithm now uses neural networks, but that does not solve the problem of ordering. There are thousands of dimensions, meaning that thousands of individual values are associated with each user.

When neural networks are used, the results are extremely difficult to interpret. Each value in the clusters describing users probably has no meaningful and legible interpretation for a human. How, then, can one explain and justify why some users are more or less favoured in the app?
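A minimal sketch of why embedding-based ranking is hard to interpret, assuming each user is represented by a dense vector and compared by dot product, as in many embedding-based recommenders. The 1,000 dimensions, the random values and the `affinity` function are placeholders chosen for illustration, not Tinder's model.

```python
import random

DIM = 1000  # hypothetical; the text mentions "thousands of dimensions"


def random_embedding(rng: random.Random, dim: int = DIM) -> list[float]:
    """Placeholder vector; in a trained model these values would be learned."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def affinity(u: list[float], v: list[float]) -> float:
    """Dot-product affinity between two user vectors."""
    return sum(a * b for a, b in zip(u, v))


rng = random.Random(42)
user = random_embedding(rng)
others = {f"user_{i}": random_embedding(rng) for i in range(5)}
ranking = sorted(others, key=lambda name: affinity(user, others[name]), reverse=True)
print(ranking)

# Coordinate 417 of `user` is just a number with no label such as "age" or "income",
# so the position of each profile cannot be explained feature by feature.
print(user[417])
```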

Recommendations based on similarity to preferences from past experiences can trap users in a filter bubble, reducing diversity in their future experiences.
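The filter-bubble effect can be illustrated with a one-dimensional toy loop. Assuming a single hypothetical feature on a 0 to 100 scale, the system recommends the unseen profile closest to the mean of past likes and, for simplicity, the user always likes it; ties go to whichever profile comes first in the pool.

```python
def filter_bubble(seed_likes: list[float], pool: list[float], rounds: int) -> list[float]:
    """Toy loop (hypothetical): each round, show the unseen profile closest to the
    mean of past likes and assume the user likes it. Ties go to the earlier item."""
    liked = list(seed_likes)
    remaining = list(pool)
    shown = []
    for _ in range(rounds):
        centre = sum(liked) / len(liked)
        pick = min(remaining, key=lambda x: abs(x - centre))
        remaining.remove(pick)
        shown.append(pick)
        liked.append(pick)  # the like feeds back into the next recommendation
    return shown


pool = list(range(0, 101, 10))  # hypothetical feature values from 0 to 100
print(filter_bubble([80], pool, rounds=4))  # [80, 70, 90, 60]
```

Starting from a single like at 80, the first four recommendations stay between 60 and 90; profiles at the low end of the scale are never shown.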

Conclusion

The development choices have real and direct consequences in society: they reinforce one type of couple and one type of similarity-based preference, while reducing the probability of finding different users. These development choices prioritise efficiency and profit.

Through the end-user interface, these choices also affect how users evaluate themselves and others (rapid elimination based on physical appearance) as they learn and adopt how the system works. But not everyone gets matches or dates!

References

- Pidoux, Jessica. “Online Dating Quantification Practices: A Human-Machine Learning Process.” 2021.

- Pidoux, Jessica. “Toi et moi, une distance calculée. Les pratiques de quantification algorithmiques sur Tinder” [You and me, a calculated distance: algorithmic quantification practices on Tinder]. 2019. In French. Qualitative analysis of Tinder's algorithm patents.

- Pidoux, Jessica, Pascale Kuntz, and Daniel Gatica-Perez. “Declarative Variables in Online Dating: A Mixed-Method Analysis of a Mimetic-Distinctive Mechanism.” ACM Conference on Computer Supported Cooperative Work and Social Computing (CSCW), 2021. Analysis of development practices when defining variables for user representation on dating apps.

- MacLeod, Caitlin, and Victoria McArthur. “The Construction of Gender in Dating Apps: An Interface Analysis of Tinder and Bumble.” Feminist Media Studies 19, no. 6 (August 18, 2019): 822–40.

Bio

Jessica Pidoux is a sociologist and holds a PhD in Digital Humanities from the École Polytechnique Fédérale de Lausanne (EPFL) and a master's degree in Sociology of Communication and Culture from the Université de Lausanne. Her research combines different methods and perspectives from sociology and data science.

Jessica is personally involved in privacy protection with PersonalData.IO, a non-profit organisation that makes our personal data rights practicable and builds governance models with civil society.